A strong technical SEO audit lays the groundwork for long-term website performance. By reviewing how your site is crawled, indexed, structured, and secured, you can uncover hidden technical issues that impact your visibility in search results. This checklist guides you through essential areas—like crawlability, site speed, structured data, and mobile responsiveness—so you can build a search-engine-friendly website that performs well and delivers a great user experience. Think of it as routine maintenance that keeps your digital presence running at full speed.

Key Takeaways

- A technical SEO audit identifies backend issues that affect search rankings and site performance.

- Validating robots.txt and XML sitemaps ensures that crawlers can reach your most valuable content.

- Core Web Vitals (LCP, INP, and CLS) impact both user experience and SEO.

- Structured data markup improves search visibility and can enable rich results.

- Mobile responsiveness and cross-browser compatibility are essential for SEO and user retention.

- Regular audits (every 3–6 months) help you stay ahead of indexing, speed, and security problems.

Laying the Groundwork: Fundamentals of Your Technical SEO Checklist

Before diving into technical audits, it’s essential to establish a solid foundation. A well-structured technical SEO checklist begins with a clear understanding of your site’s current performance, well-defined objectives, and a roadmap for technical improvement. The purpose of this groundwork is to set measurable goals such as increasing crawl efficiency, accelerating page load times, and improving mobile usability—all of which directly impact organic visibility and user satisfaction.

Define Clear Objectives for Your Technical SEO Audit

Begin by outlining the specific outcomes you want to achieve. These could include reducing crawl errors, enhancing Core Web Vitals scores, eliminating duplicate content issues, or improving site structure for better indexation. Concrete goals, such as decreasing server response time by 30% or clearing every mobile usability issue found in testing, keep the audit focused and tie each fix to business impact. Objectives should align with broader KPIs such as higher organic traffic, improved keyword rankings, and better engagement metrics.

Use the Right Tools for Accurate Diagnostics

An effective technical audit depends on having access to reliable and comprehensive SEO tools. Each tool plays a unique role:

- Google Search Console: Monitors indexation, Core Web Vitals, and structured data errors.

- Google Analytics (GA4): Tracks engagement metrics, user behavior, and performance trends.

- Screaming Frog SEO Spider: Crawls websites to detect broken links, redirect chains, duplicate content, and meta tag issues.

- Sitebulb / JetOctopus / Lumar, formerly DeepCrawl (alternative enterprise tools): Offer detailed crawl visualization and JavaScript rendering insights.

Using a mix of tools ensures you’re not missing blind spots—especially when dealing with large, dynamic, or JavaScript-heavy sites.

Understand How Search Engines Crawl and Index Your Site

A major component of technical SEO is ensuring that search engines can discover and understand your content. Crawlers like Googlebot scan your site’s code, follow internal links, and execute scripts to access dynamic content. Understanding this behavior helps you diagnose issues such as crawl traps, blocked resources, or rendering problems. It’s also important to differentiate between crawlability (whether bots can access a page) and indexability (whether that page is eligible to appear in search results). Pay close attention to directives in robots.txt, meta robots tags, and HTTP response codes, as these all influence visibility.

Ensure Proper Access to Analytics and Search Console

Data is the backbone of any audit. Make sure your team has full access to Google Search Console, GA4, and other analytics dashboards. These platforms help you:

- Evaluate the impact of technical changes on traffic and behavior.

- Identify patterns in user drop-offs, device issues, or slow-loading pages.

- Monitor real-time alerts related to crawling, indexing, mobile performance, and security.

Without access to these tools, technical issues may go undetected and undermine SEO performance over time. Timely access ensures rapid identification and resolution of problems, enabling a more agile SEO process.

Ensuring Discoverability: A Crawlability-Focused Technical SEO Checklist

Crawlability is the backbone of search visibility. If search engines can’t access your pages, they can’t index or rank them. A well-executed crawlability audit ensures that all important content is discoverable by bots like Googlebot and that technical barriers don’t obstruct your visibility in organic search results. This phase of a technical SEO audit focuses on verifying how effectively your site communicates with search engines and guides their crawling behavior.

Validate Your Robots.txt File and Crawl Directives

The robots.txt file tells search engine crawlers which parts of your website they’re allowed to access. However, even a small misconfiguration (such as an accidental Disallow: /) can stop entire sections of your site from being crawled, which keeps their content out of search results. During your audit:

- Check that no critical pages are unintentionally blocked.

- Use tools like the robots.txt report in Google Search Console (which replaced the retired robots.txt Tester) or Screaming Frog’s robots audit feature.

- Confirm that disallow directives only target low-value or admin areas (e.g., /wp-admin/, /cart/, etc.).

Consistently maintaining this file ensures that crawlers spend their time efficiently and prioritize your most valuable content.
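
The first check above can be scripted. The following is a minimal sketch using Python's standard-library `urllib.robotparser`; the sample rules and the list of critical URLs are hypothetical. Note that this parser uses simple prefix matching and does not replicate every detail of Googlebot's rule handling (such as wildcard support), so treat the result as a first pass rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules and URLs, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
"""

CRITICAL_URLS = [
    "https://example.com/",
    "https://example.com/products/blue-widget",
    "https://example.com/cart/checkout",
]

print(blocked_urls(SAMPLE_ROBOTS, CRITICAL_URLS))
```

In practice you would load the live robots.txt text and feed in the URLs from your sitemap or top landing pages; any URL the function returns deserves a manual look.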

Audit XML Sitemaps for Completeness and Accuracy

Your XML sitemap acts as a roadmap for search engines. It should include all canonical, indexable URLs that you want to appear in search results. An effective sitemap audit includes:

- Verifying that the sitemap is submitted in Google Search Console.

- Ensuring it contains only live (200 status), canonical, and non-blocked URLs.

- Removing redirects, broken links, or noindex-tagged pages from the sitemap.

- Checking for correct formatting and avoiding unnecessary sitemap index nesting.

A clean and regularly updated sitemap improves indexing coverage and signals your site’s structure clearly to search engines.
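
The sitemap checks above could be automated along these lines. This sketch parses a sitemap with the standard library and compares each URL against crawl data. The `crawl_data` shape (a dict with `status`, `noindex`, and `canonical` keys) is an assumption made for illustration; adapt it to whatever your crawler exports.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Extract every <loc> value from a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def sitemap_problems(urls: list[str], crawl_data: dict) -> dict[str, str]:
    """Flag sitemap URLs that are not 200, are noindexed, or canonicalize elsewhere."""
    problems = {}
    for url in urls:
        page = crawl_data.get(url)
        if page is None:
            problems[url] = "not crawled"
        elif page["status"] != 200:
            problems[url] = f"status {page['status']}"
        elif page["noindex"]:
            problems[url] = "noindex"
        elif page["canonical"] not in (None, url):
            problems[url] = f"canonical points to {page['canonical']}"
    return problems


# Hypothetical sitemap and crawl export, for illustration only.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
  <url><loc>https://example.com/shoes?color=red</loc></url>
</urlset>
"""

SAMPLE_CRAWL = {
    "https://example.com/": {"status": 200, "noindex": False, "canonical": "https://example.com/"},
    "https://example.com/old-page": {"status": 301, "noindex": False, "canonical": None},
    "https://example.com/shoes?color=red": {
        "status": 200, "noindex": False, "canonical": "https://example.com/shoes",
    },
}

for url, reason in sitemap_problems(sitemap_urls(SAMPLE_SITEMAP), SAMPLE_CRAWL).items():
    print(url, "->", reason)
```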

Identify and Resolve Crawl Errors

Crawl errors, such as broken links (404), soft 404s, server errors (5xx), and redirect loops, hinder both user experience and bot navigation. Left unaddressed, they waste crawl budget and send negative signals to search engines.

- Use the Page Indexing report (formerly the Coverage report) in Google Search Console to monitor crawl anomalies.

- Use log file analysis or tools like Screaming Frog or Sitebulb to identify broken internal and external links.

- Prioritize fixes based on impact—start with URLs linked in your sitemap, navigation, or high-traffic content.

Quick resolution of crawl errors helps preserve authority, ensures uninterrupted bot access, and improves user flow.
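
Redirect chains and loops are easy to spot once you have a map of source URLs to redirect targets (for example, from a crawler export). The sketch below assumes such a map as a plain dict; the function name and the outcome labels are illustrative choices, not a standard.

```python
def trace_redirects(start: str, redirects: dict[str, str], max_hops: int = 10):
    """Follow a URL through a redirect map and return (path, outcome).

    outcome is 'ok' (zero or one hop), 'chain' (more than one hop),
    'loop' (a URL repeats), or 'too long' (more than max_hops hops).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too long"
    hops = len(path) - 1
    return path, ("chain" if hops > 1 else "ok")


# Hypothetical redirect map, for illustration only.
REDIRECTS = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}

print(trace_redirects("/a", REDIRECTS))  # (['/a', '/b', '/c'], 'chain')
print(trace_redirects("/x", REDIRECTS))  # (['/x', '/y', '/x'], 'loop')
```

Chains are usually fixed by pointing the first URL straight at the final destination; loops need one of the rules removed.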

Monitor Indexation Trends in Google Search Console

Crawlability alone isn’t enough—pages also need to be indexed. Use the Page Indexing report in Google Search Console to:

- Identify how many submitted URLs are indexed.

- Detect “Discovered – currently not indexed” or “Crawled – currently not indexed” statuses, which may signal rendering or quality issues.

- Compare expected vs. actual indexation to spot discrepancies.

Monitoring indexation trends ensures that your content is making it into search results and reveals early signs of technical trouble.

Analyze Server Log Files for Search Bot Behavior

Server logs are a goldmine of SEO insight. They show you exactly how search engine crawlers interact with your website—what they crawl, how often, and where they encounter problems.

Key things to examine in log files:

- Crawl frequency across critical vs. low-priority pages.

- Repeated hits on error pages or blocked resources.

- Crawl budget distribution for large or dynamic sites.

By analyzing these patterns, you can adjust internal linking, improve crawl paths, and ensure Googlebot prioritizes your most valuable content.
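
As a starting point for this kind of analysis, the sketch below counts Googlebot requests per path and status code. It assumes your server writes the common "combined" access log format, and it identifies Googlebot by user-agent string alone, which can be spoofed; a production audit would also verify the requesting IP (for example, via reverse DNS).

```python
import re
from collections import Counter

# Assumes the "combined" access log format used by default in Apache and nginx.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines):
    """Count hits per (path, status) for requests whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match["agent"]:
            hits[(match["path"], int(match["status"]))] += 1
    return hits


# Hypothetical log lines, for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /products/blue-widget HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2024:13:56:01 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for (path, status), count in googlebot_hits(SAMPLE_LOG).most_common():
    print(count, status, path)
```

Sorting the result by count shows where crawl budget is going; filtering for 4xx and 5xx statuses shows where bots repeatedly hit errors.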

Structuring for Success: Auditing Site Architecture and Internal Linking

A strong site architecture and well-planned internal linking are foundational elements of technical SEO. They guide both search engine crawlers and users through your content in a logical, efficient way. When properly executed, these structural elements help distribute link equity across your website, improve crawl efficiency, and elevate the overall user experience. A clear hierarchy also makes your content more discoverable, especially for large or complex websites.

Evaluate URL Structure for Clarity and Relevance

A clean URL structure improves both usability and crawlability. During your audit:

- Ensure URLs are short, descriptive, and include target keywords where appropriate.

- Remove unnecessary parameters, session IDs, or tracking strings that create duplicate URLs.

- Use hyphens to separate words and avoid underscores, spaces, or mixed cases.

- Maintain a consistent format that mirrors your site’s content hierarchy.

Search engines and users alike benefit from readable, SEO-friendly URLs that clearly communicate the purpose of a page.
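
The URL rules above lend themselves to a simple linter. This sketch flags a few of them using `urllib.parse`; the set of tracking and session parameter names is an assumption, so adjust it to match your own analytics setup.

```python
from urllib.parse import parse_qsl, urlsplit

# Assumed parameter names; adjust to your site's tracking and session setup.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sid"}


def url_issues(url: str) -> list[str]:
    """Return a list of structural problems found in a single URL."""
    parts = urlsplit(url)
    issues = []
    if parts.path != parts.path.lower():
        issues.append("uppercase characters in path")
    if "_" in parts.path:
        issues.append("underscores in path")
    if " " in parts.path or "%20" in parts.path:
        issues.append("spaces in path")
    flagged = sorted({key.lower() for key, _ in parse_qsl(parts.query)} & TRACKING_PARAMS)
    if flagged:
        issues.append("tracking or session parameters: " + ", ".join(flagged))
    return issues


print(url_issues("https://example.com/blog/technical-seo-checklist"))
print(url_issues("https://example.com/Blog/my_post?utm_source=news&sid=9"))
```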

Assess the Logic and Depth of Your Site Navigation

Site navigation should be intuitive, organized, and shallow enough to allow important content to be reached within three clicks or fewer. Audit your menu and navigation structure by checking:

- Whether main categories and subcategories follow a logical flow.

- If users can easily move from broad content topics to specific information.

- Whether all key pages are accessible from the homepage or major landing pages.

A shallow, well-structured navigation not only improves user experience but also ensures deeper pages are easily discoverable by search engine crawlers.
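
Click depth can be measured with a breadth-first search over your internal link graph. The sketch below assumes the graph is available as a dict mapping each page to the pages it links to (a shape most crawlers can export); the three-click threshold mirrors the guideline above.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; returns minimum clicks to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict[str, list[str]], home: str = "/", max_clicks: int = 3) -> list[str]:
    """Pages that take more than max_clicks clicks to reach from the homepage."""
    return sorted(page for page, depth in click_depths(links, home).items() if depth > max_clicks)


# Hypothetical link graph, for illustration only.
LINKS = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"], "/d": []}

print(too_deep(LINKS))  # ['/d']
```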

Audit Internal Linking Strategy to Guide Authority Flow

Internal links help search engines understand the relationship between pages and determine which content is most important. An effective internal linking audit should include:

- Evaluating anchor text to ensure it’s descriptive and relevant.

- Ensuring important pages (e.g., product pages, service pages, or cornerstone content) receive sufficient internal links.

- Identifying orphan pages—pages without any internal links—and integrating them into your linking strategy.

Strategic internal linking enhances crawl paths, improves topic relevance, and boosts the SEO value of high-priority content.
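
To find orphan pages and under-linked content, count the internal links pointing at each known page. This sketch assumes two inputs: a link graph (page to outgoing links) and a list of all pages you expect to exist, such as the URLs in your sitemap. Both shapes are illustrative.

```python
from collections import Counter


def inlink_counts(links: dict[str, list[str]], all_pages: list[str]) -> Counter:
    """Count distinct pages linking to each known page, ignoring self-links."""
    counts = Counter({page: 0 for page in all_pages})
    for source, targets in links.items():
        for target in set(targets):
            if target != source and target in counts:
                counts[target] += 1
    return counts


def orphan_pages(links: dict[str, list[str]], all_pages: list[str], home: str = "/") -> list[str]:
    """Known pages (other than the homepage) that receive no internal links."""
    counts = inlink_counts(links, all_pages)
    return sorted(page for page, n in counts.items() if n == 0 and page != home)


# Hypothetical data, for illustration only.
LINK_GRAPH = {"/": ["/a", "/b"], "/a": ["/b", "/a"], "/c": ["/a"]}
ALL_PAGES = ["/", "/a", "/b", "/c", "/d"]

print(orphan_pages(LINK_GRAPH, ALL_PAGES))  # ['/c', '/d']
```

The same counts can be sorted ascending to surface important pages that receive only one or two internal links.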

Check for Proper Implementation of Breadcrumb Navigation

Breadcrumbs serve as secondary navigation and reinforce your site’s hierarchy. They:

- Help users orient themselves within your site.

- Create additional internal links for bots to crawl.

- Improve usability and reduce bounce rates.

During the audit, confirm that breadcrumbs are implemented consistently and marked up with appropriate structured data (BreadcrumbList schema) to enhance search result snippets.
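
For reference, BreadcrumbList markup is a short JSON-LD object listing each step of the trail in order. The sketch below builds one from a list of (name, URL) pairs; the trail itself is hypothetical, and the output would be embedded in a `<script type="application/ld+json">` tag on the page.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> dict:
    """Build a schema.org BreadcrumbList from (name, url) pairs, homepage first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical trail, for illustration only.
TRAIL = [
    ("Home", "https://example.com/"),
    ("Shoes", "https://example.com/shoes/"),
    ("Running Shoes", "https://example.com/shoes/running/"),
]

print(json.dumps(breadcrumb_jsonld(TRAIL), indent=2))
```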

Ensure a Clear and Hierarchical Information Structure

An effective content hierarchy allows users and crawlers to understand which content is most important and how different pages relate to one another. Look for:

- A logical outline of parent and child pages.

- Proper use of heading tags (H1-H6) that mirror the content structure.

- Clarity in how content flows from top-level topics to detailed subtopics.

A clear information hierarchy improves crawlability, content comprehension, and overall site UX—all of which contribute to stronger organic performance.

On-Page Technical Elements: Refining Core SEO Signals

On-page technical elements bridge the gap between your content and how search engines interpret it. Optimizing these foundational components ensures that your site is both user-friendly and semantically structured for search visibility. A focused audit of these elements improves clarity, boosts relevance signals, and enhances how your pages appear in search results.

Audit Title Tags and Meta Descriptions for Optimization

Title tags and meta descriptions are critical for signaling the relevance and intent of each page. Both can appear directly in search results: title tags act as a ranking signal, while meta descriptions mainly influence click-through rates (CTR). During your audit:

- Ensure every page has a unique, descriptive title tag (typically under 60 characters).

- Optimize meta descriptions (under 155–160 characters) to summarize the content and include target keywords naturally.

- Avoid duplication and keyword stuffing; prioritize clarity and alignment with user intent.

Well-crafted metadata helps search engines match each page to the right queries and helps your listings stand out in the SERPs.
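
The length checks above are simple to script with Python's built-in HTML parser. This is a sketch under the character limits mentioned in this section (60 for titles, 160 for descriptions); search engines truncate by pixel width rather than character count, so treat the limits as rough guides.

```python
from html.parser import HTMLParser


class HeadMetaParser(HTMLParser):
    """Collects the <title> text and the meta description content."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def metadata_issues(html: str, max_title: int = 60, max_description: int = 160) -> list[str]:
    """Return missing or over-length title and meta description problems."""
    parser = HeadMetaParser()
    parser.feed(html)
    title = parser.title.strip()
    issues = []
    if not title:
        issues.append("missing title")
    elif len(title) > max_title:
        issues.append(f"title is {len(title)} characters")
    if parser.description is None:
        issues.append("missing meta description")
    elif len(parser.description) > max_description:
        issues.append(f"meta description is {len(parser.description)} characters")
    return issues


print(metadata_issues("<html><head><title></title></head></html>"))
```

Running this over every crawled page and grouping identical titles would also surface the duplication problem mentioned above.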

Verify Heading Tag Usage and Hierarchy

Heading tags (H1 through H6) provide structure and guide both users and search engines through your content. An effective audit should:

- Confirm that each page uses one <h1> that clearly defines the main topic.

- Use subheadings (<h2>, <h3>, etc.) in logical sequence to break up content.

- Avoid skipping heading levels or using them for purely visual styling.

Proper heading structure enhances readability and helps search engines parse content topics and relationships.
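
A heading audit can be reduced to two rules: exactly one h1, and no jump of more than one level downward. The following sketch applies both to raw HTML using the standard library; it checks document order only and does not account for headings hidden by CSS or injected by JavaScript.

```python
import re
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Records heading levels (1 to 6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Flag a missing or repeated h1 and any skipped heading levels."""
    parser = HeadingParser()
    parser.feed(html)
    levels = parser.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected one h1, found {h1_count}")
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{previous} followed by h{current} skips a level")
    return issues


print(heading_issues("<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"))
```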

Examine Image Optimization and Alt Attributes

Images are powerful content enhancers but must be optimized for performance and accessibility. Focus your audit on:

- Reducing file sizes (preferably under 100 KB for most web images) without compromising visual quality.

- Using appropriate formats like WebP or compressed JPEG/PNG.

- Ensuring every image includes descriptive, keyword-relevant alt text to support screen readers and image indexing.

- Implementing lazy loading where appropriate to improve page speed.

Optimized images improve user engagement and contribute positively to technical performance metrics.
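
Two of the image checks above (alt text and lazy loading) can be read straight from the markup. This sketch lists images with missing or blank alt attributes and images without `loading="lazy"`. Both lists need human review: purely decorative images may legitimately use empty alt text, and above-the-fold images generally should not be lazy-loaded.

```python
from html.parser import HTMLParser


class ImageAuditParser(HTMLParser):
    """Collects image sources with missing alt text or without native lazy loading."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []
        self.not_lazy = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src") or "(no src)"
        if not (attrs.get("alt") or "").strip():
            self.missing_alt.append(src)
        if (attrs.get("loading") or "").lower() != "lazy":
            self.not_lazy.append(src)


def audit_images(html: str) -> dict[str, list[str]]:
    """Return image sources grouped by the check they failed."""
    parser = ImageAuditParser()
    parser.feed(html)
    return {"missing_alt": parser.missing_alt, "not_lazy": parser.not_lazy}


SAMPLE_HTML = (
    '<img src="/a.jpg" alt="Blue widget" loading="lazy">'
    '<img src="/b.jpg">'
    '<img src="/c.jpg" alt="  ">'
)

print(audit_images(SAMPLE_HTML))
```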

Validate Structured Data Markup for Rich Result Eligibility

Structured data (Schema.org markup) helps search engines understand the context of your content. When properly implemented, it can enhance your search listings with features like star ratings, FAQs, breadcrumbs, and product information. As part of your audit:

- Use tools like Google’s Rich Results Test and Schema Markup Validator to check implementation.

- Ensure JSON-LD format is correctly embedded in the page.

- Include relevant schema types (e.g., Product, Article, BreadcrumbList, FAQPage) based on content type.

- Avoid spammy or misleading markup to remain eligible for enhanced results.

Correct structured data increases visibility and can significantly improve SERP real estate.
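
Before running pages through Google's validators, a quick local pass can catch the most basic failure: JSON-LD that does not parse. The sketch below extracts every `application/ld+json` script from a page, reports parse errors, and lists the schema types it finds. It is a syntax check only and does not verify required properties for rich result eligibility.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the raw text of every application/ld+json script block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = None

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append("".join(self._buffer))
            self._buffer = None


def jsonld_report(html: str) -> tuple[list, list[str]]:
    """Return (schema types found, errors) for the JSON-LD blocks in a page."""
    parser = JsonLdParser()
    parser.feed(html)
    types, errors = [], []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(f"invalid JSON: {exc.msg}")
            continue
        items = data if isinstance(data, list) else [data]
        flat = []
        for item in items:
            # Expand @graph containers so nested items are checked individually.
            if isinstance(item, dict) and isinstance(item.get("@graph"), list):
                flat.extend(item["@graph"])
            else:
                flat.append(item)
        for item in flat:
            if isinstance(item, dict) and "@type" in item:
                types.append(item["@type"])
            else:
                errors.append("item without @type")
    return types, errors
```

A trailing comma or an unescaped quote is enough to make a whole block unreadable, so this check is worth running on templates whenever they change.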

Detect and Resolve Duplicate or Thin Content Issues

Duplicate and thin content can harm your site’s authority and dilute keyword focus. During the audit:

- Identify pages with similar or low-value content using tools like Screaming Frog or Sitebulb.

- Apply canonical tags (rel="canonical") to consolidate duplicate content variations.

- Consider merging or improving thin pages with limited textual depth or unique value.

- Audit URL parameters and faceted navigation that may be generating near-duplicates.

Maintaining unique, substantial content on each indexed page strengthens topical authority and improves relevance in search results.
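
One common way to quantify near-duplication is to compare pages by overlapping word sequences ("shingles") and compute the Jaccard similarity of the two sets. This is a generic technique offered as a sketch, not the method any particular SEO tool uses; the three-word shingle size is an arbitrary choice, and any similarity threshold you act on needs tuning for your content.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Similarity between two texts: shared shingles divided by all shingles."""
    set_a, set_b = shingles(a, size), shingles(b, size)
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


print(jaccard("red running shoes for men", "red running shoes for women"))  # 0.5
```

Pages that score close to 1.0 against each other are candidates for a canonical tag or a merge; very short pages will also stand out because they produce few shingles.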

Boosting Performance: A Critical Element of Technical SEO and User Experience

Website performance is a cornerstone of both search engine optimization and user satisfaction. Slow-loading pages, layout instability, and poor mobile responsiveness directly affect rankings, bounce rates, and conversions. A thorough performance audit ensures your site meets modern speed standards and delivers a frictionless experience across all devices and platforms.

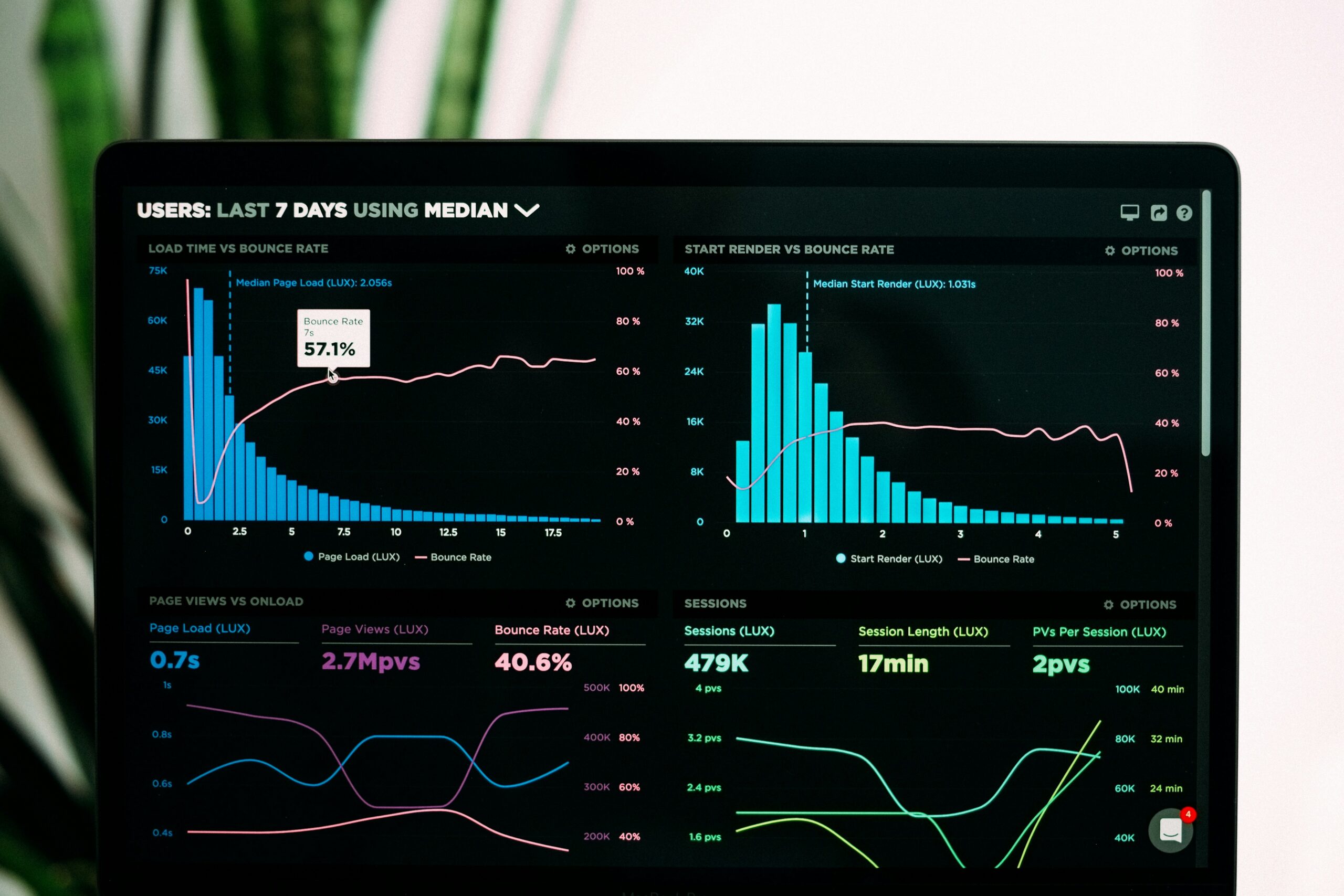

Measure Page Speed and Core Web Vitals

Page speed is not only a confirmed ranking factor but also a key driver of user engagement. Focus on auditing Core Web Vitals, Google’s performance metrics that gauge real-world user experience:

- LCP (Largest Contentful Paint): Measures loading speed; aim for <2.5 seconds.

- INP (Interaction to Next Paint): Assesses responsiveness; aim for ≤200 ms. INP replaced FID (First Input Delay) as a Core Web Vital in March 2024.

- CLS (Cumulative Layout Shift): Evaluates visual stability; aim for <0.1.

Use tools like Google PageSpeed Insights, Lighthouse, and Chrome User Experience Report (CrUX) to gather and interpret this data. Prioritize improvements that reduce loading bottlenecks and streamline asset delivery.
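
Once you have field data, rating each metric is a threshold lookup. The sketch below uses Google's published "good" and "needs improvement" boundaries for LCP, CLS, and INP (the responsiveness metric that replaced FID as a Core Web Vital in March 2024). Google assesses these at the 75th percentile of page loads, so the values passed in are assumed to be p75 figures.

```python
# Upper bounds for (good, needs improvement); anything above the second is poor.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a p75 metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical p75 values for one page, for illustration only.
for metric, value in {"LCP": 2.4, "INP": 350, "CLS": 0.3}.items():
    print(metric, value, rate(metric, value))
```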

Test Mobile-Friendliness and Responsive Design

With mobile-first indexing now standard, your website must be fully optimized for mobile devices. An audit should include:

- Testing across a range of screen sizes and devices (smartphones, tablets, desktops).

- Ensuring that menus, CTAs, and interactive elements are touch-friendly and properly scaled.

- Verifying that layouts adjust dynamically without content cut-off or horizontal scrolling.

Use tools like Lighthouse and the device emulation mode in Chrome DevTools to detect usability issues early. (Google retired its standalone Mobile-Friendly Test in December 2023.)

Evaluate Cross-Browser and Cross-Device Compatibility

Your website must offer a consistent experience regardless of browser or operating system. Audit for:

- Visual rendering issues or layout shifts in Chrome, Firefox, Safari, Microsoft Edge, and other major browsers.

- Functional discrepancies (e.g., broken forms or buttons) across desktop and mobile operating systems.

- JavaScript errors or CSS inconsistencies that vary across environments.

Testing your site in multiple browsers and devices ensures accessibility for all users and eliminates preventable technical friction.

Identify Code Optimization and Minification Opportunities

Excessive or unoptimized code can weigh down your site and increase page load times. As part of your audit:

- Minify HTML, CSS, and JavaScript files by removing whitespace, comments, and unused code.

- Consolidate stylesheets and scripts to reduce HTTP requests.

- Eliminate render-blocking resources from the critical rendering path.

Use Lighthouse, GTmetrix, or WebPageTest to identify bulky scripts and monitor their impact on performance.

Review Caching Strategy and CDN Configuration

Proper caching and a well-configured Content Delivery Network (CDN) are essential for delivering content quickly, especially to users in different regions. During your audit:

- Check server response headers for proper cache-control settings and expiration dates.

- Ensure static assets (e.g., images, fonts, CSS) are cached efficiently.

- Verify that your CDN is correctly distributing content based on geographic proximity to minimize latency.

These improvements reduce server load and enable faster, more reliable content delivery.
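
Checking cache headers comes down to parsing the `Cache-Control` value and looking at its directives. The sketch below does that for static assets; the one-week minimum `max-age` is an assumed policy chosen for illustration, not a standard, so set it to whatever your caching strategy calls for.

```python
def parse_cache_control(header: str) -> dict:
    """Parse a Cache-Control header into {directive: value or True}."""
    directives = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') or True
    return directives


def cache_issue(header: str, min_max_age: int = 604800):
    """Return None if a static asset looks cacheable, else a description of the problem."""
    if not header:
        return "no Cache-Control header"
    directives = parse_cache_control(header)
    if "no-store" in directives or "no-cache" in directives:
        return "caching disabled"
    max_age = directives.get("max-age")
    if not isinstance(max_age, str) or not max_age.isdigit():
        return "no max-age"
    if int(max_age) < min_max_age:
        return f"max-age of {max_age}s is below {min_max_age}s"
    return None


print(cache_issue("public, max-age=31536000, immutable"))  # None
print(cache_issue("max-age=3600"))
```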

Maintaining Site Health: Auditing Security, Internationalization, and Reporting

A technically sound website must be secure, properly geo-targeted, and consistently monitored. Ongoing checks in these areas ensure SEO performance, long-term user trust, and global reach. This technical SEO audit phase reinforces your site’s structural and operational integrity across regions and platforms.

Confirm HTTPS Implementation and SSL Integrity

Using HTTPS is a baseline security requirement and a confirmed Google ranking signal. During your audit:

- Ensure that a valid SSL certificate is installed and renewed automatically.

- Test for mixed content issues, where secure pages load insecure resources.

- Redirect all HTTP versions to HTTPS using 301 redirects to preserve link equity.

A fully encrypted site protects user data, builds trust, and meets modern search engine expectations for secure browsing.
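
Mixed content can be detected by scanning a page's markup for resources loaded over plain HTTP. This sketch covers a handful of common resource tags and stylesheet links; it deliberately ignores ordinary `<a>` links and canonical links, which are not loaded as page resources. Resources requested from CSS or JavaScript would need a browser-based check instead.

```python
from html.parser import HTMLParser


class MixedContentParser(HTMLParser):
    """Collects (tag, url) pairs for resources loaded over http://."""

    SRC_TAGS = {"img", "script", "iframe", "source", "video", "audio"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link":
            # Only stylesheet links are fetched as page resources in this sketch.
            if "stylesheet" not in (attrs.get("rel") or "").lower():
                return
            url = attrs.get("href")
        elif tag in self.SRC_TAGS:
            url = attrs.get("src")
        else:
            return
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html: str) -> list[tuple[str, str]]:
    """Return insecure resource references found in an HTTPS page's HTML."""
    parser = MixedContentParser()
    parser.feed(html)
    return parser.insecure
```

Each hit should either be switched to an HTTPS URL or, if the host does not support HTTPS, replaced with a self-hosted copy.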

Check for Common Website Security Vulnerabilities

Security breaches not only harm user trust—they can also lead to deindexation or search engine warnings. An SEO audit should include:

- Scanning for threats like SQL injection, cross-site scripting (XSS), and malware using tools such as Sucuri, SiteLock, or manual pentesting.

- Ensuring that software, plugins, and CMS platforms are regularly updated.

- Validating proper use of HTTP security headers (e.g., Content-Security-Policy, X-Frame-Options).

Proactive security audits minimize exposure to attacks and help maintain consistent site uptime and search visibility.
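
A header check can be as small as comparing a response's headers against a list you expect to see. In the sketch below, Content-Security-Policy and X-Frame-Options come from the checklist above; Strict-Transport-Security and X-Content-Type-Options are additions assumed here as commonly recommended companions. Presence alone does not mean a header is configured well, so review the values too.

```python
# The last two entries are assumed additions beyond the headers named above.
RECOMMENDED_HEADERS = [
    "Content-Security-Policy",
    "X-Frame-Options",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
]


def missing_security_headers(response_headers: dict[str, str]) -> list[str]:
    """Return recommended headers absent from a response (case-insensitive match)."""
    present = {name.lower() for name in response_headers}
    return [header for header in RECOMMENDED_HEADERS if header.lower() not in present]


# Hypothetical response headers, for illustration only.
SAMPLE_HEADERS = {
    "content-security-policy": "default-src 'self'",
    "X-Frame-Options": "DENY",
}

print(missing_security_headers(SAMPLE_HEADERS))
```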

Audit Hreflang Tags for International Targeting Accuracy

For multilingual or region-specific websites, correctly implemented hreflang attributes ensure the right version of your content appears to the appropriate audience. During the audit:

- Verify that each hreflang tag matches the correct language-region format (e.g., en-us, fr-ca).

- Ensure every alternate version includes reciprocal hreflang annotations.

- Check for implementation consistency across canonical URLs.

Correct hreflang usage prevents duplicate content issues across regions and improves the user experience for international audiences.
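
The reciprocity rule above is the one most often broken, and it is easy to test once hreflang annotations are collected per page. This sketch assumes a dict mapping each page URL to its `{hreflang code: alternate URL}` annotations. The format check is a simplification: it accepts `x-default` or a two-letter language with an optional two-letter region, and it does not verify that the codes are real ISO values or handle script subtags.

```python
import re

# Simplified pattern: x-default, or language with optional region (e.g. en, en-us).
HREFLANG_PATTERN = re.compile(r"x-default|[a-z]{2}(-[a-z]{2})?", re.IGNORECASE)


def hreflang_issues(annotations: dict[str, dict[str, str]]) -> list[str]:
    """Flag malformed codes and alternates that do not link back to the source page."""
    issues = []
    for page, alternates in annotations.items():
        for code, target in alternates.items():
            if not HREFLANG_PATTERN.fullmatch(code):
                issues.append(f"{page}: malformed code '{code}'")
            if target == page:
                continue  # self-reference, nothing to reciprocate
            back_links = annotations.get(target, {})
            if page not in back_links.values():
                issues.append(f"{page}: {target} does not link back")
    return issues


# Hypothetical annotations, for illustration only.
ANNOTATIONS = {
    "https://example.com/en/": {
        "en-us": "https://example.com/en/",
        "fr-ca": "https://example.com/fr/",
    },
    "https://example.com/fr/": {
        "fr-ca": "https://example.com/fr/",
    },
}

for issue in hreflang_issues(ANNOTATIONS):
    print(issue)
```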

Verify Canonical Tag Usage to Consolidate Duplicate Content

Canonical tags help search engines identify the primary version of a page when similar or duplicate content exists across different URLs. To audit effectively:

- Confirm that self-referencing canonicals are used where appropriate.

- Ensure that variations (e.g., URL parameters or filtered results) point to a single, preferred version.

- Avoid conflicting signals by aligning canonical tags with robots directives, hreflang annotations, and sitemap entries.

Proper canonicalization consolidates link equity, prevents indexing of duplicate content, and improves crawl efficiency.
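
The conflicting-signal checks above can be expressed as a few comparisons over crawl data. This sketch assumes a dict mapping each URL to its declared canonical and noindex state, plus the set of URLs in your sitemap; the three conflict labels are illustrative wording, not terms from any tool.

```python
def canonical_conflicts(pages: dict[str, dict], sitemap_urls: set[str]) -> list[tuple[str, str]]:
    """Find pages whose canonical tag disagrees with the sitemap, robots meta, or its target."""
    conflicts = []
    for url, page in pages.items():
        canonical = page.get("canonical")
        if not canonical or canonical == url:
            continue  # absent or self-referencing: nothing to reconcile here
        if url in sitemap_urls:
            conflicts.append((url, "listed in sitemap but canonicalizes elsewhere"))
        if page.get("noindex"):
            conflicts.append((url, "noindex combined with a cross-URL canonical"))
        target = pages.get(canonical)
        if target and target.get("canonical") not in (None, canonical):
            conflicts.append((url, "canonical target points to a third URL"))
    return conflicts


# Hypothetical crawl data, for illustration only.
PAGES = {
    "/shoes": {"canonical": "/shoes", "noindex": False},
    "/shoes?sort=price": {"canonical": "/shoes", "noindex": True},
    "/boots?ref=a": {"canonical": "/boots-old", "noindex": False},
    "/boots-old": {"canonical": "/boots", "noindex": False},
}
SITEMAP = {"/shoes", "/boots?ref=a"}

for url, problem in canonical_conflicts(PAGES, SITEMAP):
    print(url, "->", problem)
```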

Compile a Clear, Actionable Audit Report

Once the audit is complete, organize your findings into a prioritized implementation plan. The final report should:

- Highlight issues by category: critical (urgent fixes), moderate (recommended changes), and low priority (monitor).

- Include technical evidence (screenshots, crawl data, validation errors) for each issue.

- Provide actionable next steps, estimated effort, and potential SEO impact.

This roadmap not only guides immediate optimizations but also establishes a framework for ongoing site maintenance and performance tracking.

Technical SEO Checklist Summary

This checklist reflects the technical foundation that supports strong SEO performance. Each component plays a role in improving discoverability, protecting users, and sustaining long-term site health.

Frequently Asked Questions

What is a technical SEO checklist?

It’s a step-by-step list of technical elements to review and fix—like crawl errors, mobile issues, or page speed—to make sure your website is search-engine friendly and easy to navigate.

Why is validating the robots.txt file important?

It helps search engines know which pages to crawl or skip. Incorrect rules in this file can block important content from appearing in search results.

How do XML sitemaps benefit SEO?

They act as a roadmap for search engines, making it easier for crawlers to find and index key pages on your site.

What is the impact of page speed on SEO?

Fast-loading pages improve user experience and are favored by search engines. Poor Core Web Vitals can lead to lower rankings and higher bounce rates.

How does structured data help search visibility?

Structured data helps search engines understand your content better. When implemented correctly, it can improve your appearance in search with rich snippets like ratings, FAQs, or breadcrumbs.

Why is HTTPS essential for websites?

HTTPS ensures that data passed between your site and its users is encrypted and secure. It also acts as a ranking signal for search engines.

How often should a technical SEO audit be done?

You should perform a technical SEO audit every 3 to 6 months—or immediately after any major changes to your website.

What does canonicalization do in SEO?

It helps search engines understand the preferred version of a page when duplicate or similar content exists across multiple URLs.

Why is log file analysis useful in SEO?

It shows how search engine bots interact with your site, revealing crawl frequency, skipped pages, or wasted crawl budget.

Final Thoughts

A well-structured technical SEO audit ensures that your website is fully accessible, fast, and properly optimized for search engines and users alike. From resolving crawl errors and validating structured data to improving site speed and mobile performance, every step in this checklist strengthens your site’s foundation. Regular audits not only fix current issues but also help you stay ahead of search algorithm changes. By making technical SEO part of your ongoing site maintenance, you’ll protect your rankings and create a better experience for every visitor.